Project #8 - Data Portrait

Objective

Create a data portrait out of a personal habit of yours.

Submission

My data portrait is a simple representation of the types of content I

saved onto the platform, Are.na, over the past 12 days.

Reflection

At the beginning of the exercise, I knew that I didn't want to manually

create a spreadsheet or manually translate the data from .csv to json. I

wrote a python script to help me translate my raw data (my natural

interactions with the platform, Are.na) into spreadsheet and csv format.

I did this by accessing the Are.na API and scraping the content from

Are.na, then pushing that content into a spreadsheet. Code for that is

below:

#program to create csv from arena api scrape for my recent channel adds

from arena import Arena

import time

#api data

my_access_token = "REDACTED"

arena = Arena(my_access_token)

user = arena.users.user('z-ai')

#list all blocks to scrape

blocks = ["9679315", "9679246", "9651385", "9644289", "9633280",

"9626461", "9625940", "9625054", "9621997", "9621939", "9621905",

"9612164", "9609724", "9607341", "9602743", "9592700", "9592679",

"9591470", "9587335", "9578941", "9566487", "9566485", "9566481",

"9566455", "9552489", "9552471", "9552262", "9552260", "9550685",

"9543588", "9546276"]

#create arrays to store data

days = []

block_ids = []

titles = []

images = []

block_types = []

sources = []

channels = []

channel_types = []

#iterate thru blocks

for block in blocks:

#assign data variables

new_block = arena.blocks.block(block)

block_id = new_block.id

title = str(new_block.title)

content = str(new_block.content)

day = str(new_block.updated_at[8:10])

block_type = str(new_block.base_class)

channel = str(new_block.connections[0]["title"])

channel_type_split =

str(new_block.connections[0]["title"].strip("[").split("]"))

result = channel_type_split[1:len(channel_type_split)-1].split(", ")

channel_type = result[0]

if new_block.title == "":

if new_block.content == "":

title = "Untitled"

else:

title = content

else:

title = new_block.title

if new_block.source:

source = str(new_block.source["url"])

else:

source = "No URL"

if new_block.image:

image = str(new_block.image["display"]["url"])

else:

image = "No Image"

#append data into arrays

days.append(day)

block_ids.append(block_id)

titles.append(title)

images.append(image)

block_types.append(block_type)

sources.append(source)

channels.append(channel)

channel_types.append(channel_type)

#prevent blockage by setting time breaks

time.sleep(0.25)

import csv

import json

#create new csv and write

with open('arena.csv', 'w') as arena_file:

#create headers in csv

fieldnames = ['Day', 'ID', 'Title', 'Image', 'Block Type', 'Source',

'Channel', 'Channel Type']

arena_writer = csv.DictWriter(arena_file, fieldnames=fieldnames)

arena_writer.writeheader()

#store data under each header column in csv

for (the_day, the_block_id, the_title, the_image, the_block_type,

the_source, the_channel, the_channel_type) in zip(days, block_ids,

titles, images, block_types, sources, channels, channel_types):

arena_writer.writerow({fieldnames[0]: the_day, fieldnames[1]:

the_block_id, fieldnames[2]: the_title, fieldnames[3]: the_image,

fieldnames[4]: the_block_type, fieldnames[5]: the_source, fieldnames[6]:

the_channel, fieldnames[7]: the_channel_type})

#csv to json

import csv

import json

# new function, args are exiting csv file and future json file

def make_json(csvFilePath, jsonFilePath):

# new dictionary to store data

data = {}

# read csv with open(csvFilePath, encoding='utf-8') as csvf:

csvReader = csv.DictReader(csvf)

# each row turned into dictionary and is added to data dictionary

for rows in csvReader:

# 1st column named 'No' (number) will be the the primary key

key = rows['No']

data[key] = rows

# open json writer, and use the json.dumps() function to dump data

with open(jsonFilePath, 'w', encoding='utf-8') as jsonf:

jsonf.write(json.dumps(data, indent=4))

# declare fill paths

csvFilePath = r'arena-updated-2.csv'

jsonFilePath = r'arena-4.json'

# call json function

make_json(csvFilePath, jsonFilePath)

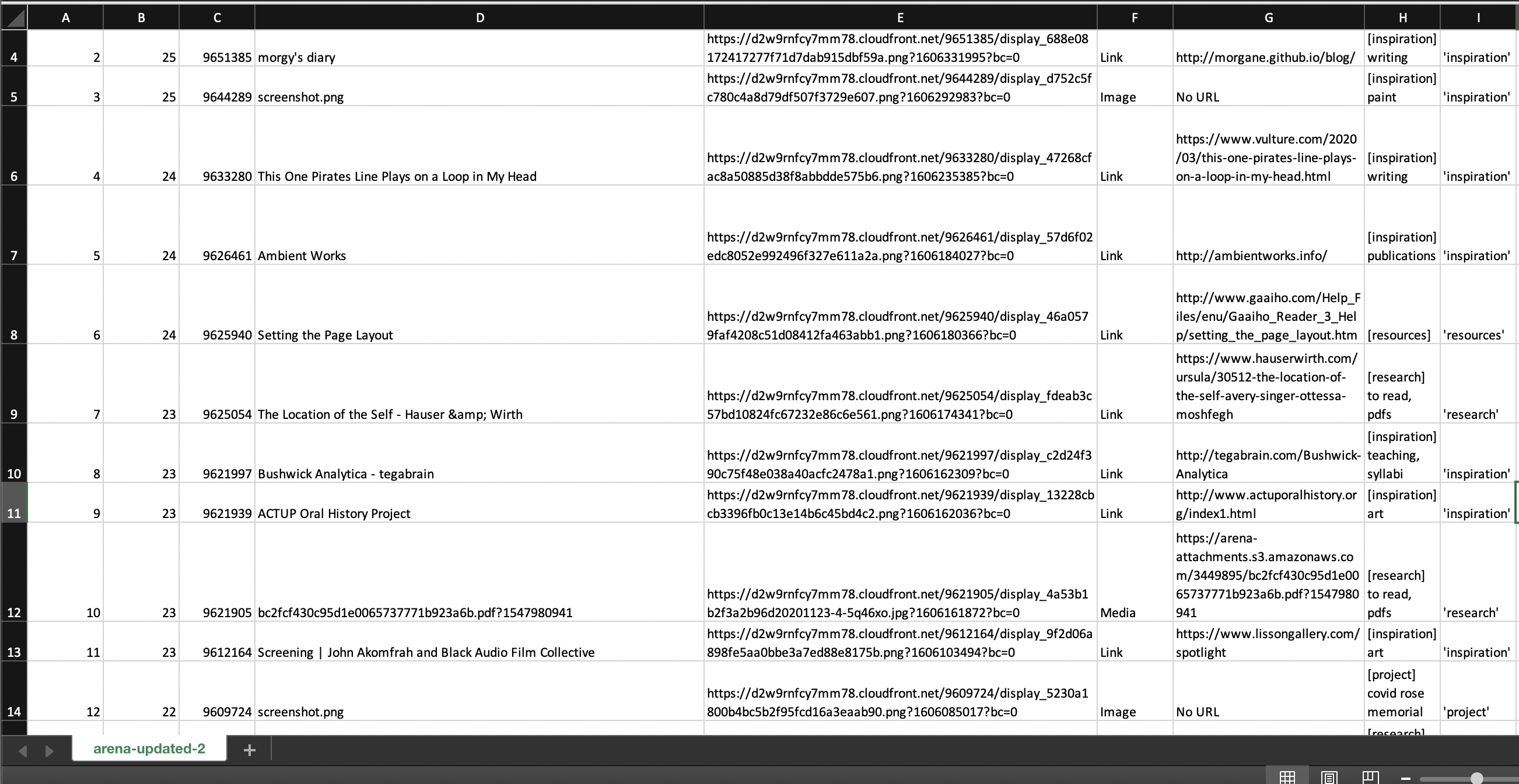

This code produced the following spreadsheet:

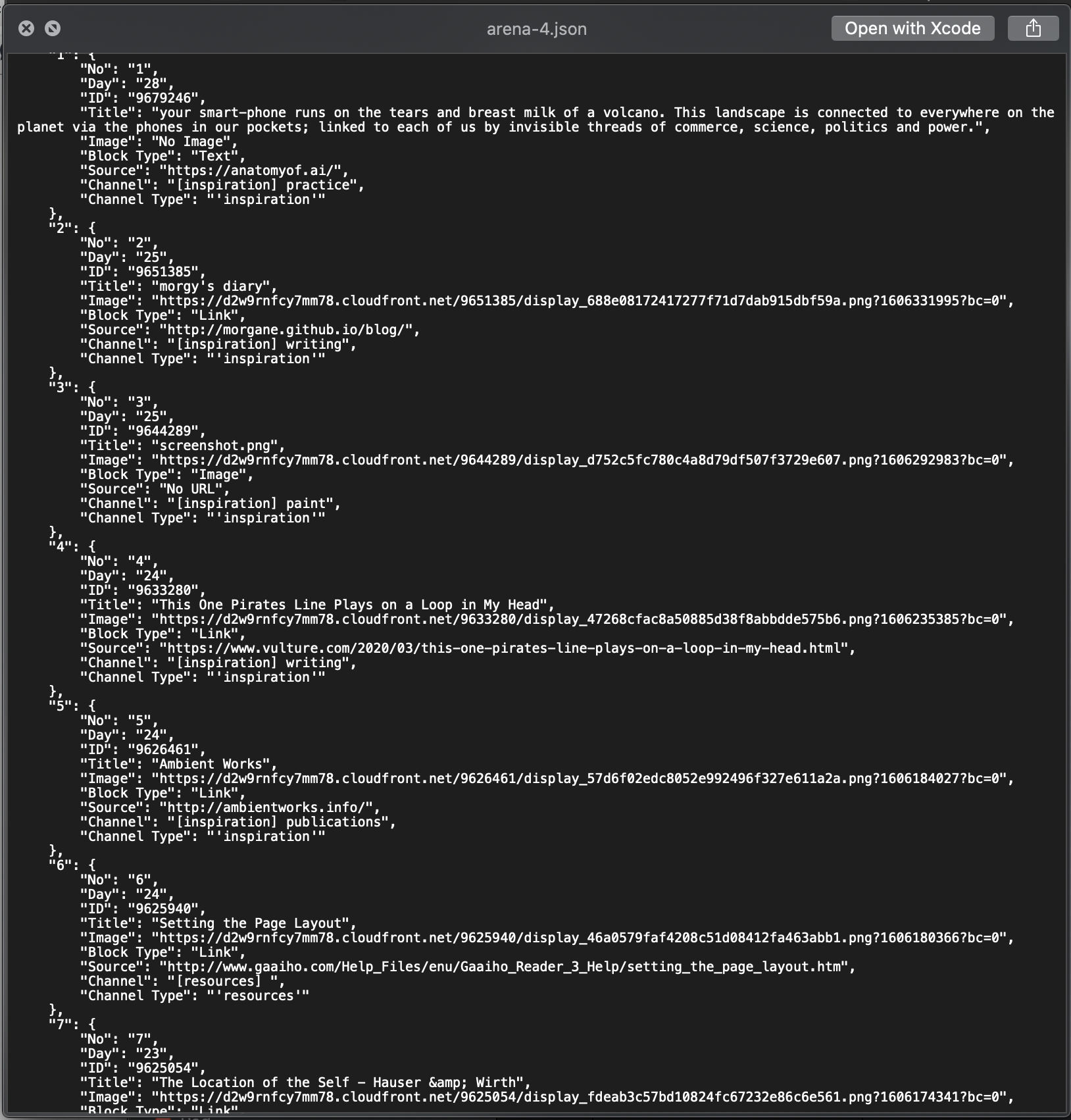

This code also produced the following JSON file: